I noticed that the Discovery board uses an STM32F103C8T6 for its JTAG section,

[Link: https://www.st.com/en/evaluation-tools/b-l072z-lrwan1.html]

so I went looking for a way to convert the bluepill board I bought a while back into a JTAG adapter.

There doesn't seem to be any legitimate, official way to do it.

[Link: https://blog.naver.com/chandong83/222586172793]

[Link: https://www.st.com/en/development-tools/stsw-link007.html] << firmware uploader

[Link: https://www.st.com/en/development-tools/stsw-link004.html] << firmware uploader

[Link: https://github.com/Krakenw/Stlink-Bootloaders] << ST-Link firmware that someone dumped

| This Firmware is not open source. But it may be delivered in a few cases after request to an FAE or a marketing agent. |

[Link: https://community.st.com/t5/stm32-mcus-boards-and-hardware/st-link-source-code/td-p/711010]

Conversely, if JTAG adapters are sold cheaply, maybe buying one and using it like a bluepill would be an option too?

'embeded > Cortex-M3 STM' 카테고리의 다른 글

| stm32f103ret crc (0) | 2025.12.09 |

|---|---|

| stm32f103ret middleware - usb (0) | 2025.12.09 |

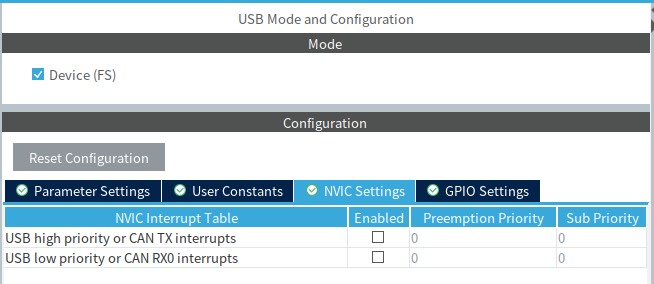

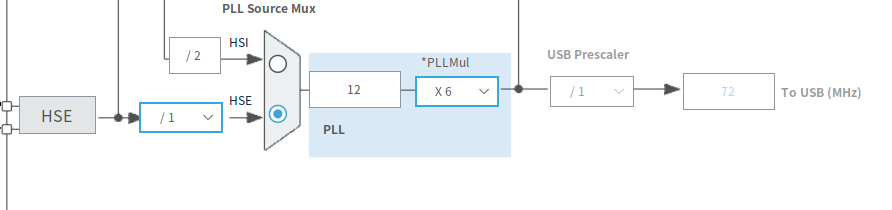

| stm32f103ret connectivity - usb (0) | 2025.12.09 |

| STSW-STM32084 / usb demo (0) | 2025.12.09 |

| stlink v2 클론 도착! (0) | 2025.11.26 |